Batches

Batches allow us to run our Flows in bulk. We are going to use a Batch to run a set of questions through our ChatBot and automatically evaluate how the Flow performed against our Evaluations.

Creating a Dataset

For our ChatBot, we are going to generate some question-and-answer pairs from our data and see how our ChatBot performs against some standard Evaluations. It's best to have these pairs written by humans, but if you are just testing, you can generate some sample question-and-answer pairs by cloning the Question and Answer Generator.
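If you just need a test file, a small script can also write one. The sketch below is a minimal example. It assumes a two-column layout with hypothetical `question` and `answer` headers, because the platform's required column names are not specified here. The pairs are placeholders, not real evaluation data.

```python
import csv

# Placeholder pairs for testing only; real evaluation data is best written by humans.
SAMPLE_PAIRS = [
    ("What does a Batch do?", "It runs a Flow over every row of a Dataset."),
    ("What does the Correctness Eval compare against?", "The ground-truth answer in the Dataset."),
]


def write_dataset(path, pairs):
    """Write (question, answer) pairs to a CSV file with a header row.

    The 'question'/'answer' column names are hypothetical; csv handles quoting
    of commas and quotes inside the text.
    """
    with open(path, "w", newline="", encoding="utf-8") as f:
        writer = csv.DictWriter(f, fieldnames=["question", "answer"])
        writer.writeheader()
        for question, answer in pairs:
            writer.writerow({"question": question, "answer": answer})


if __name__ == "__main__":
    write_dataset("sampleQA.csv", SAMPLE_PAIRS)
```

You can then upload the resulting `sampleQA.csv` in the steps below. During Batch setup, you map its columns to the Flow input and the ground truth.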

Click Data, then Dataset, in the Project Navigation. Then click ‘Create Dataset’.


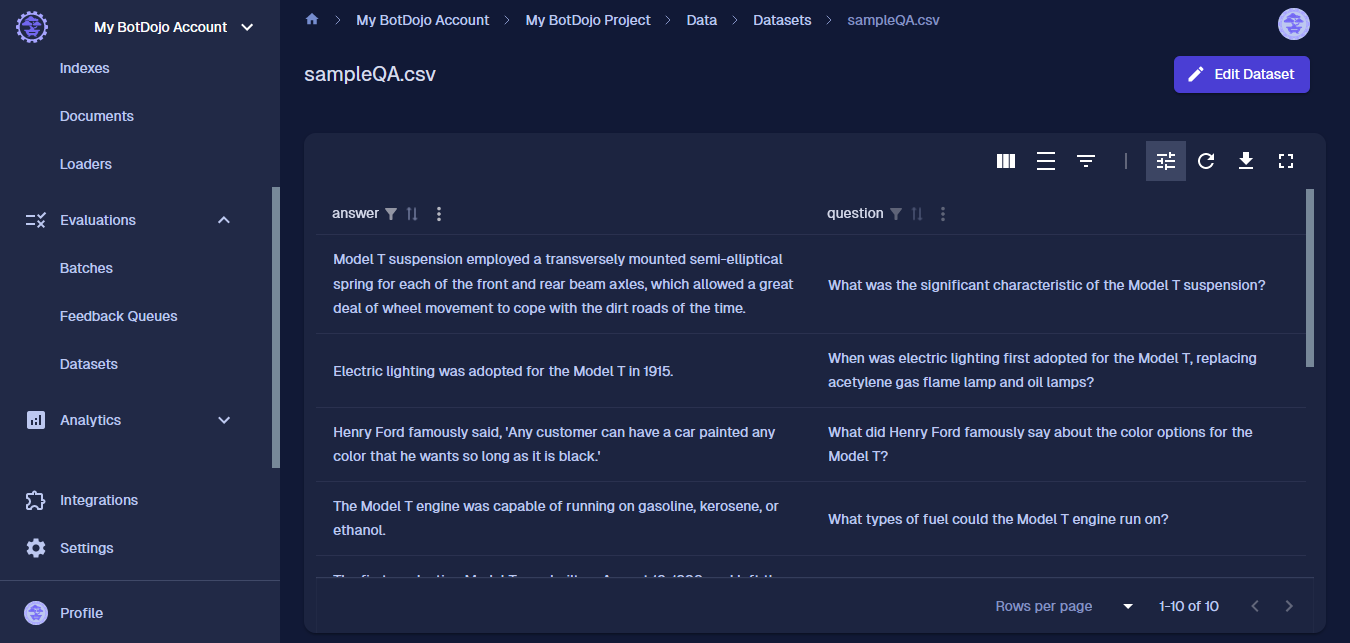

Upload your CSV. Your Dataset should look like this:

Create a Batch

Click Batches in the left menu.

- Click "New Batch".

- Select our ‘Q&A Chatbot’ as the Flow.

- Select our sampleQA.csv as the Dataset.

- Next, expand Run Options and check the Correctness Eval. Now that we have ground truth, we can map it to the Correctness Evaluation parameter that we set to ‘Not Mapped’ in a previous step.

- Map the Dataset fields to the Flow input and the Correctness ground truth. When you are done, it should look like this:


Click 'Click and Run' and watch the Batch process each question.

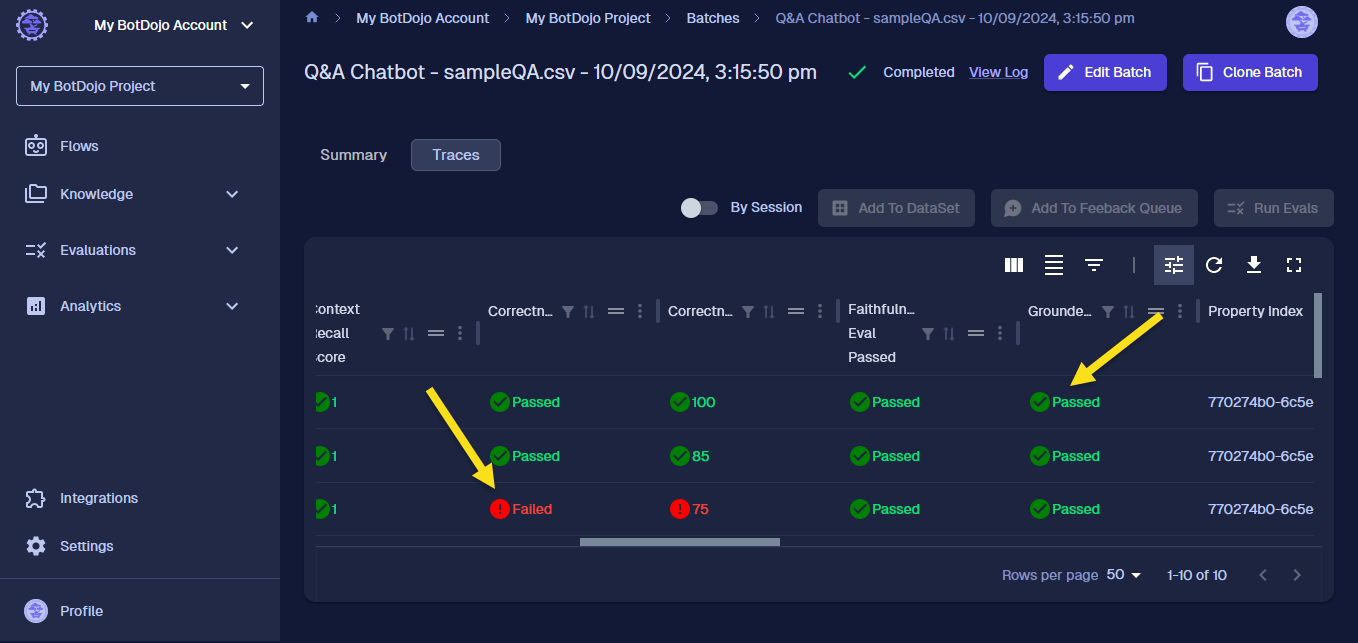
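Conceptually, a Batch performs the same steps for every Dataset row. It reads the mapped Flow input, runs the Flow, and scores the output against the mapped ground truth. The sketch below is a minimal local illustration under stated assumptions. `FIELD_MAP`, `run_batch`, and the `flow` callable are hypothetical. `correctness` is a simple token-overlap F1 swapped in for illustration; it is not the platform's Correctness Eval.

```python
import re
from collections import Counter
from statistics import mean

# Hypothetical mapping from Batch roles to Dataset column names.
FIELD_MAP = {"flow_input": "question", "ground_truth": "answer"}


def tokens(text):
    """Lowercase alphanumeric word tokens; punctuation is dropped."""
    return re.findall(r"[a-z0-9]+", text.lower())


def correctness(output, ground_truth):
    """Toy token-overlap F1 in [0, 1]; NOT the platform's Correctness Eval."""
    out, ref = tokens(output), tokens(ground_truth)
    # Multiset intersection counts each shared token at most min(count) times.
    overlap = sum((Counter(out) & Counter(ref)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(out)
    recall = overlap / len(ref)
    return 2 * precision * recall / (precision + recall)


def run_batch(rows, flow, field_map=FIELD_MAP):
    """Run `flow` on each row's mapped input and score it against the mapped ground truth."""
    results = []
    for row in rows:
        question = row[field_map["flow_input"]]
        expected = row[field_map["ground_truth"]]
        output = flow(question)
        results.append({"input": question, "output": output,
                        "score": correctness(output, expected)})
    return results


if __name__ == "__main__":
    rows = [{"question": "What does a Batch do?",
             "answer": "It runs a Flow over every row of a Dataset."}]

    # Hypothetical stand-in for the 'Q&A Chatbot' Flow.
    def fake_flow(question):
        return "A Batch runs a Flow over every row of a Dataset."

    results = run_batch(rows, fake_flow)
    print(f"mean score: {mean(r['score'] for r in results):.2f}")
```

Keeping the field mapping in one dict mirrors the mapping step in the Batch setup: only the column names change between Datasets, not the loop.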

Looking at the results

On the Batch page, we can see how our ChatBot answered each question and how our Evaluation scored each answer. You can view the Trace for each request and hover over the Evaluation Results to see details. Now, whenever we change our Flow, we can run a Batch to check whether our ChatBot is improving over time. Next, let's add Parameters to our ChatBot so we can see how it performs with different models or prompts.
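To track improvement across runs, one option is to compare per-question scores from two Batches. The sketch below assumes you have collected each run's scores into a dict keyed by question. How to export them from the Batch page is not covered here, and `compare_runs` is a hypothetical helper.

```python
from statistics import mean


def compare_runs(before, after):
    """Compare two Batch runs given {question: score} dicts (hypothetical format).

    Only questions present in both runs are compared.
    """
    shared = sorted(set(before) & set(after))
    if not shared:
        raise ValueError("the two runs share no questions")
    return {
        "mean_before": mean(before[q] for q in shared),
        "mean_after": mean(after[q] for q in shared),
        "improved": [q for q in shared if after[q] > before[q]],
        "regressed": [q for q in shared if after[q] < before[q]],
    }


if __name__ == "__main__":
    # Placeholder scores for illustration only, not real Batch results.
    run_1 = {"What does a Batch do?": 0.4, "What is a Dataset?": 0.9}
    run_2 = {"What does a Batch do?": 0.8, "What is a Dataset?": 0.7}
    print(compare_runs(run_1, run_2))
```

Listing regressions alongside the mean matters because a change to a model or prompt can raise the average while making specific answers worse.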