LLM Nodes

Overview of LLM Nodes

LLM Nodes are a core building block for creating applications with Large Language Models (LLMs) in BotDojo. These nodes allow you to define the specific LLM provider and model to be used, as well as the prompt and any additional parameters required for generating responses.

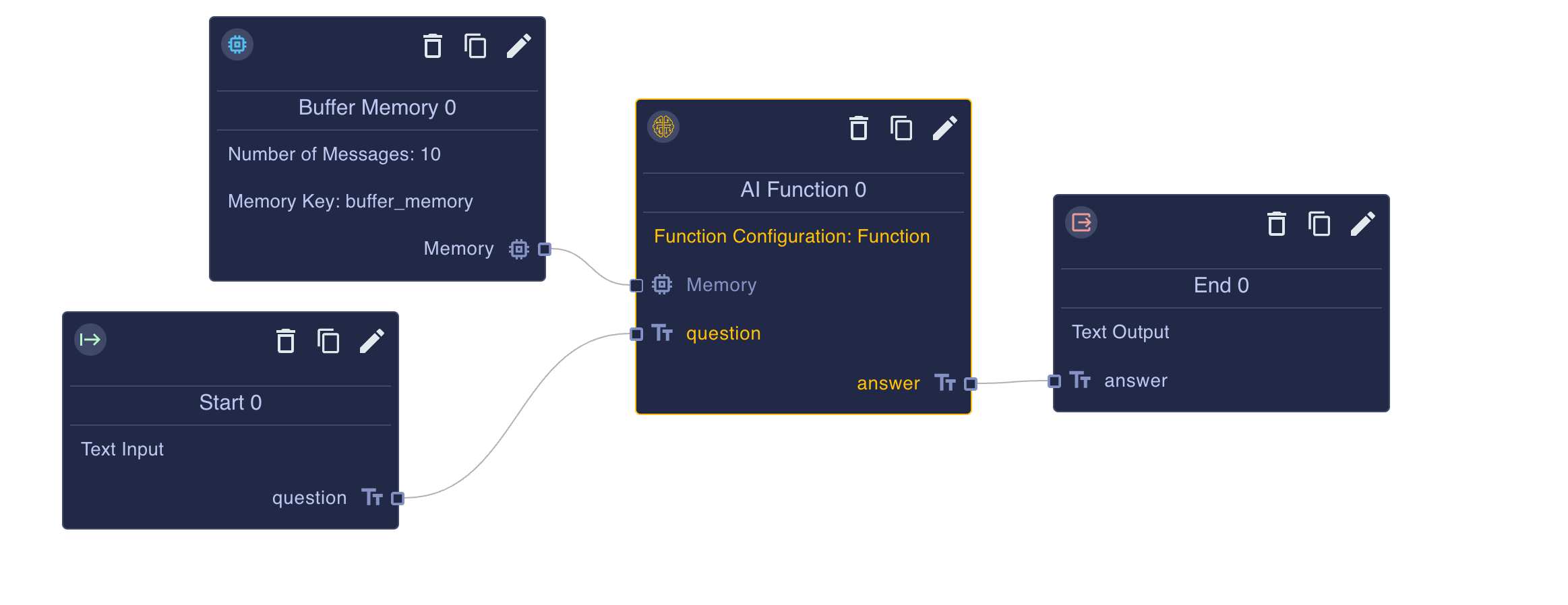

LLM Nodes are highly flexible and can be connected to other nodes in your application flow, such as Start and End Nodes, to create powerful conversational AI experiences.

Configuring an LLM Node

To configure an LLM Node, click the Edit icon on the node. This opens the Node editor in the right side panel, which provides an interface similar to OpenAI's Playground.

In the Flow Designer editor, you can:

- Drag the LLM Node to your Flow

- Choose the LLM provider and model to use (e.g., OpenAI GPT-3.5 Turbo, GPT-4, Groq Llama)

- Test it out

LLM Settings

The LLM Node provides several settings to customize the behavior of the LLM:

- Provider: Select the LLM provider you want to use (e.g., OpenAI, Anthropic, Cohere).

- Model: Choose the specific model from the selected provider (e.g., GPT-3.5 Turbo, GPT-4, Claude).

- Temperature: Control the randomness of the generated text. Lower values make the output more focused and deterministic, while higher values make it more diverse and creative. Range: 0 to 1.

- Max Tokens: Set the maximum number of tokens (words or word pieces) in the generated response. This helps control the length of the output. By default, this is null, and the LLM will determine when to stop.

- Top P: An alternative to temperature. Top P (nucleus) sampling selects from the smallest possible set of tokens whose cumulative probability exceeds the probability p. Range: 0 to 1.

- Frequency Penalty: Adjusts how likely the model is to repeat itself. Positive values penalize new tokens based on how often they have already appeared in the text, decreasing the model's likelihood of repeating the same line verbatim.

- Stop On: Define a list of strings where the API will stop generating further tokens if it encounters any of them. This is useful for preventing the model from generating unwanted or irrelevant content.

- Stream to Chat: Determines whether the results of the LLM stream to the user in a chat interface (such as Slack or Microsoft Teams). Streaming improves the user experience because users get immediate feedback on their questions. The available options are:

  - Default: If an LLM or AI Agent Node is connected to the End Node, the results stream to the user; otherwise, they do not.

  - Always: Always stream results to the chat interface.

  - Never: Never stream results to the chat interface.

By configuring these settings, you can fine-tune the behavior of the LLM Node to suit your specific use case and requirements.
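
To make the sampling settings above more concrete, here is a minimal Python sketch of how Temperature, Top P, and Frequency Penalty are commonly applied to a model's next-token scores. It is illustrative only: the function names, the tiny vocabulary, and the scores are hypothetical, and each provider implements these settings internally in its own way.

```python
import math
from collections import Counter


def apply_frequency_penalty(logits, generated, penalty):
    """Lower each token's score in proportion to how often it already appeared."""
    counts = Counter(generated)
    return {tok: logit - penalty * counts[tok] for tok, logit in logits.items()}


def softmax_with_temperature(logits, temperature):
    """Turn scores into probabilities; lower temperature sharpens the distribution."""
    if temperature <= 0:
        # Treat temperature 0 as greedy decoding: all mass on the top token.
        best = max(logits, key=logits.get)
        return {tok: 1.0 if tok == best else 0.0 for tok in logits}
    scaled = {tok: logit / temperature for tok, logit in logits.items()}
    peak = max(scaled.values())  # subtract the max for numerical stability
    exps = {tok: math.exp(value - peak) for tok, value in scaled.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}


def top_p_filter(probs, top_p):
    """Keep the smallest set of most likely tokens whose cumulative probability reaches top_p."""
    kept, cumulative = {}, 0.0
    for tok, p in sorted(probs.items(), key=lambda kv: kv[1], reverse=True):
        kept[tok] = p
        cumulative += p
        if cumulative >= top_p:
            break
    total = sum(kept.values())
    return {tok: p / total for tok, p in kept.items()}  # renormalize the survivors


# Hypothetical next-token scores for a tiny vocabulary.
logits = {"password": 2.0, "profile": 1.0, "settings": 0.5, "banana": -1.0}

penalized = apply_frequency_penalty(logits, generated=["password", "password"], penalty=0.5)
focused = softmax_with_temperature(logits, temperature=0.2)
diverse = softmax_with_temperature(logits, temperature=1.0)
nucleus = top_p_filter(diverse, top_p=0.8)

print("top token probability at T=0.2:", round(focused["password"], 3))
print("top token probability at T=1.0:", round(diverse["password"], 3))
print("tokens kept by top_p=0.8:", sorted(nucleus))
```

In this sketch, the lower temperature concentrates probability on the highest-scoring token, while Top P discards the unlikely tail before sampling. In practice it is usually enough to adjust either Temperature or Top P rather than both.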

Prompt Messages

The prompt is the main input to the LLM and consists of a series of messages that provide context and instructions for generating a response. BotDojo supports three types of prompt messages, plus a special Chat History placeholder:

- System: Provides high-level instructions or context for the LLM. This is typically used to set the tone, persona, or behavior of the model.

- Assistant: Represents the model's previous responses in the conversation. This helps maintain context and coherence throughout the interaction.

- User: Contains the user's input or query that the model should respond to.

- Chat History: A special placeholder where Memory supplies the earlier turns of the conversation as context. To use Memory, add a Buffer Memory node to your flow and connect it to your LLM Node.
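
As a rough illustration of how the message types above fit together, the following Python sketch assembles a prompt in the order System, Chat History, User. The `role`/`content` dictionary layout follows the common chat-completion convention and is an assumption here, not BotDojo's confirmed internal format; `build_messages` and the sample conversation are hypothetical.

```python
def build_messages(system_prompt, chat_history, user_prompt):
    """Assemble prompt messages in order: System, earlier turns, then the new User message."""
    messages = [{"role": "system", "content": system_prompt}]
    # Chat History placeholder: earlier user/assistant turns supplied by memory.
    messages.extend(chat_history)
    messages.append({"role": "user", "content": user_prompt})
    return messages


# Hypothetical conversation so far, as a memory component might store it.
history = [
    {"role": "user", "content": "Hi, I can't log in."},
    {"role": "assistant", "content": "Sorry to hear that. Did you forget your password?"},
]

messages = build_messages(
    system_prompt="You are a helpful support assistant.",
    chat_history=history,
    user_prompt="Yes, where do I reset it?",
)

for message in messages:
    print(f'{message["role"]}: {message["content"]}')
```

Without the history entries, the model would see only the final user message and could not tell what "it" refers to, which is why the Chat History placeholder matters for multi-turn conversations.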

Input Schema

The Input Schema defines the structure of the data that can be inserted into the prompt messages using Handlebars Template Language. This allows you to dynamically populate the prompt with relevant information from previous nodes or user input. You can modify the Input Schema by clicking the Edit Icon on the Node and selecting the Input Schema tab.

BotDojo uses JSON Schema to define the inputs and outputs to an LLM. You can either specify a schema directly or use the UI to define it.

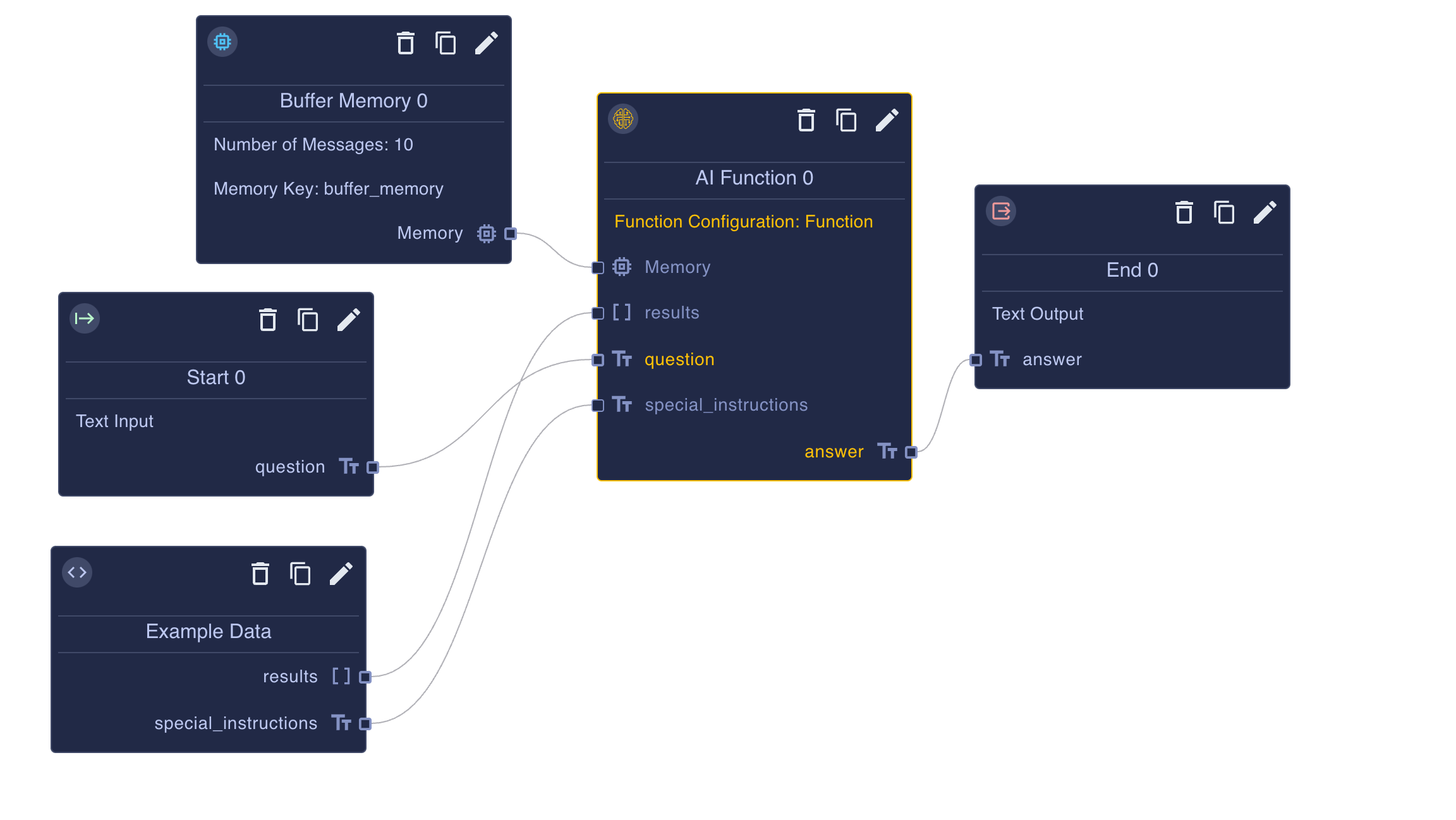

For example, let's say you have an Input Schema that expects three variables: "special_instructions", "results", and "question". The input data might look like this:

    {
      "special_instructions": "Respond to the user like a pirate",
      "results": [
        {
          "text": "You can change your password by going to [My Profile](https://sample.com/myprofile) and click Change my Password."
        },
        {
          "text": "If you forget your password click on reset my password at [ScheduleOnBoarding](https://sample.com/myprofile)"
        }
      ],
      "question": "Where do i reset my password"
    }
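
For reference, a JSON Schema describing data of this shape might look like the sketch below, written as a Python dictionary so it can be checked with a few lines of code. Marking all three fields as required is an assumption, and `missing_required` is a hypothetical helper that only checks top-level keys; it is not a full JSON Schema validator and not part of BotDojo.

```python
import json

# Hypothetical JSON Schema for the three example variables.
input_schema = {
    "type": "object",
    "properties": {
        "special_instructions": {"type": "string"},
        "results": {
            "type": "array",
            "items": {
                "type": "object",
                "properties": {"text": {"type": "string"}},
                "required": ["text"],
            },
        },
        "question": {"type": "string"},
    },
    # Assumption: every field must be present.
    "required": ["special_instructions", "results", "question"],
}

# Shortened version of the example data.
example_input = {
    "special_instructions": "Respond to the user like a pirate",
    "results": [{"text": "You can change your password by going to My Profile."}],
    "question": "Where do i reset my password",
}


def missing_required(schema, data):
    """Return required top-level keys that are absent from the data."""
    return [key for key in schema.get("required", []) if key not in data]


print(json.dumps(input_schema, indent=2))
print("missing fields:", missing_required(input_schema, example_input))
```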

This is how you could format your prompt using the example data.

System Prompt:

    You are a wise philosopher named Socrates. Greet the user by their name and provide insightful answers to their questions.

    {{{special_instructions}}}

User Prompt:

    Use the following content to answer the question

    {{#each results}}
    {{this.text}}
    {{/each}}

    User's question:
    {{question}}
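
To show roughly what these templates expand to, the sketch below reproduces their behavior in plain Python rather than with a Handlebars engine. It assumes standard Handlebars semantics: `{{...}}` HTML-escapes the inserted value, `{{{...}}}` inserts it as-is, and `{{#each}}` repeats its body once per array item. The function names are hypothetical, `html.escape` only approximates Handlebars' escaping rules, and exact whitespace in the real output may differ.

```python
import html


def render_system_prompt(data):
    """Mimic the System Prompt template; {{{...}}} inserts the value without escaping."""
    return (
        "You are a wise philosopher named Socrates. Greet the user by their name "
        "and provide insightful answers to their questions.\n"
        + data["special_instructions"]
    )


def render_user_prompt(data):
    """Mimic the User Prompt template; {{...}} escapes HTML characters in the value."""
    lines = ["Use the following content to answer the question"]
    # {{#each results}} ... {{/each}} repeats its body once per array item.
    for result in data["results"]:
        lines.append(html.escape(result["text"]))
    lines.append("User's question:")
    lines.append(html.escape(data["question"]))
    return "\n".join(lines)


example_input = {
    "special_instructions": "Respond to the user like a pirate",
    "results": [
        {"text": "You can change your password by going to [My Profile](https://sample.com/myprofile) and click Change my Password."},
        {"text": "If you forget your password click on reset my password at [ScheduleOnBoarding](https://sample.com/myprofile)"},
    ],
    "question": "Where do i reset my password",
}

system_prompt = render_system_prompt(example_input)
user_prompt = render_user_prompt(example_input)

print(system_prompt)
print("---")
print(user_prompt)
```

The escaping difference is why the system prompt uses triple braces: instructions containing characters such as `<` or `&` would otherwise reach the model as HTML entities.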

By leveraging the Input Schema and Handlebars templating, you can create dynamic and personalized prompts that adapt to the specific context of each conversation.

Output Schema

The Output Schema is also defined using JSON Schema. By default, the Output Schema is set to text_output, so the raw output of the LLM is returned. However, if you specify a JSON Schema for the output, BotDojo passes that schema to the model so that it generates valid JSON in the results.

Not all models support JSON output. For models that don't, you must provide formatting instructions in the prompt and then add a Code Node to parse the JSON.

| Provider | Models Supported |
|---|---|
| OpenAI (Function Calling) | GPT-3.5 Turbo, GPT-4 Turbo |
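
For models without native JSON output, the parsing step mentioned above could follow logic along these lines. This is a plain-Python sketch of the parsing idea only: the Code Node's actual language and API are not covered here, and `parse_json_output` and the sample model response are hypothetical.

```python
import json
import re

FENCE = "`" * 3  # a Markdown code fence, which models often wrap around JSON


def parse_json_output(raw_text):
    """Extract and parse the outermost JSON object found in raw LLM text output."""
    # Prefer the contents of a fenced block if the model added one.
    fenced = re.search(FENCE + r"(?:json)?\s*(.*?)" + FENCE, raw_text, re.DOTALL)
    candidate = fenced.group(1) if fenced else raw_text
    start, end = candidate.find("{"), candidate.rfind("}")
    if start == -1 or end < start:
        raise ValueError("no JSON object found in model output")
    return json.loads(candidate[start : end + 1])


# Hypothetical raw response from a model that was asked to reply in JSON.
raw = (
    "Sure! Here is the result:\n"
    + FENCE + "json\n"
    + '{"answer": "Go to My Profile", "confidence": 0.9}\n'
    + FENCE
)

parsed = parse_json_output(raw)
print(parsed["answer"])
```

Models that are only instructed through the prompt often add commentary or code fences around the JSON, so stripping that wrapper before calling `json.loads` makes the parsing step more tolerant. Malformed JSON still raises an error, which the flow should handle.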